0 Preface

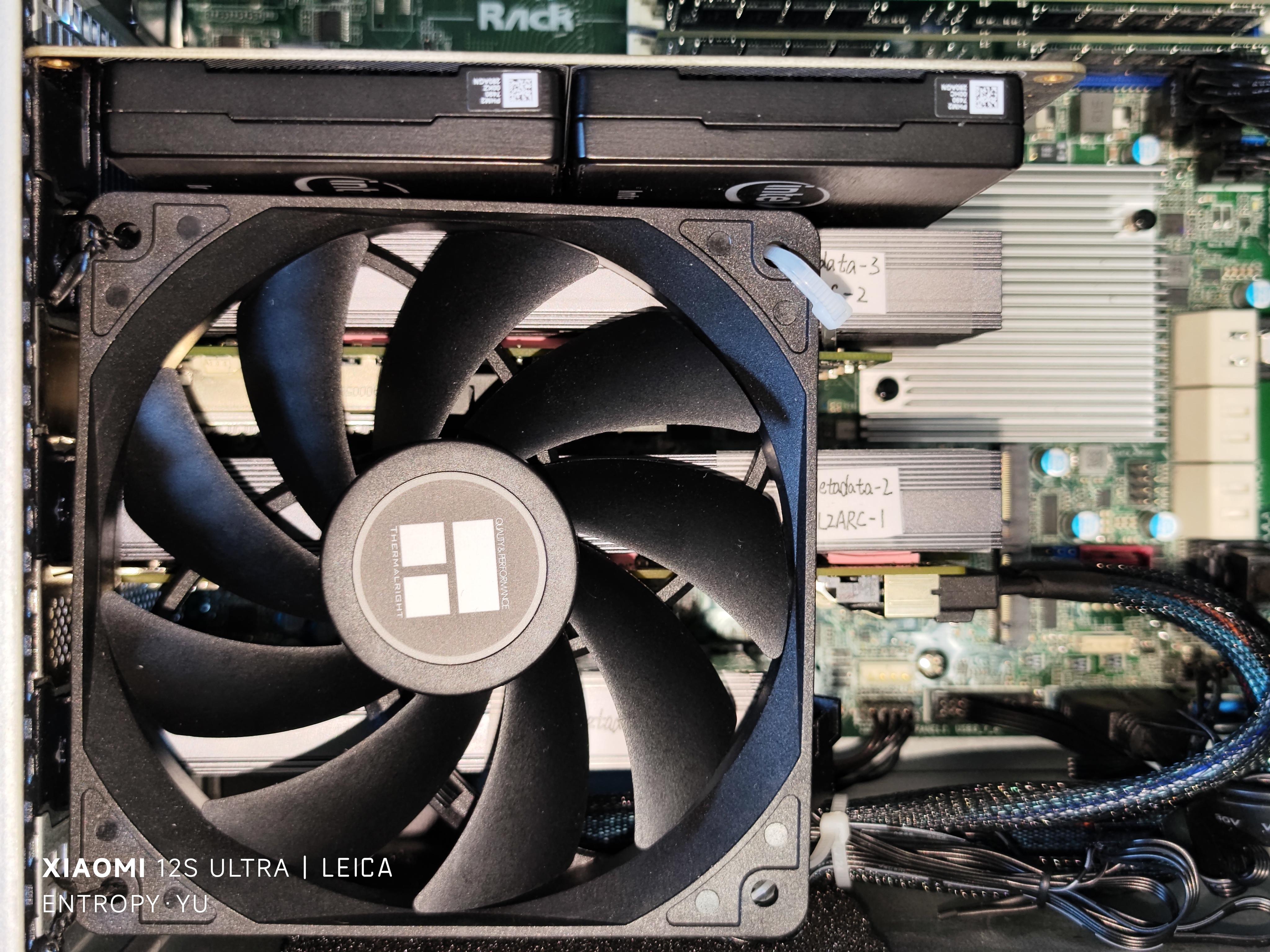

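In early September I designed a small cluster for a friend's research group. To save money, the storage (NAS) part was built DIY from individually purchased components, and the networking gear is second-hand. From ordering the parts to finishing assembly and debugging, the NAS and the switch took 10 days. I had planned to write one combined post once the whole cluster was deployed. However, problems came up along the way and the compute nodes still have not arrived, so I am publishing the NAS part on its own first.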

1 Introduction

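More than a month has passed since these parts were bought and prices have changed a lot, so treat the prices as a rough reference only.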

If you want to save every last cent, you can also use a pulled (second-hand) HP 544+FLR as the NIC. It uses the same CX3 Pro chip.

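I did not choose the Seagate EXOS X18 for the 18TB HDDs. In Backblaze's drive failure reports from recent years, Seagate's high-capacity helium EXOS HDDs show a much higher failure rate than the WDC Ultrastar line inherited from HGST. I recommend looking up the Backblaze reports yourself.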

PCIe Gen 4 SSDs are used on a PCIe Gen 3 platform because of the ZhiTai (致态) 7100's endurance (recommended video: https://www.bilibili.com/video/BV19a4y137p3).

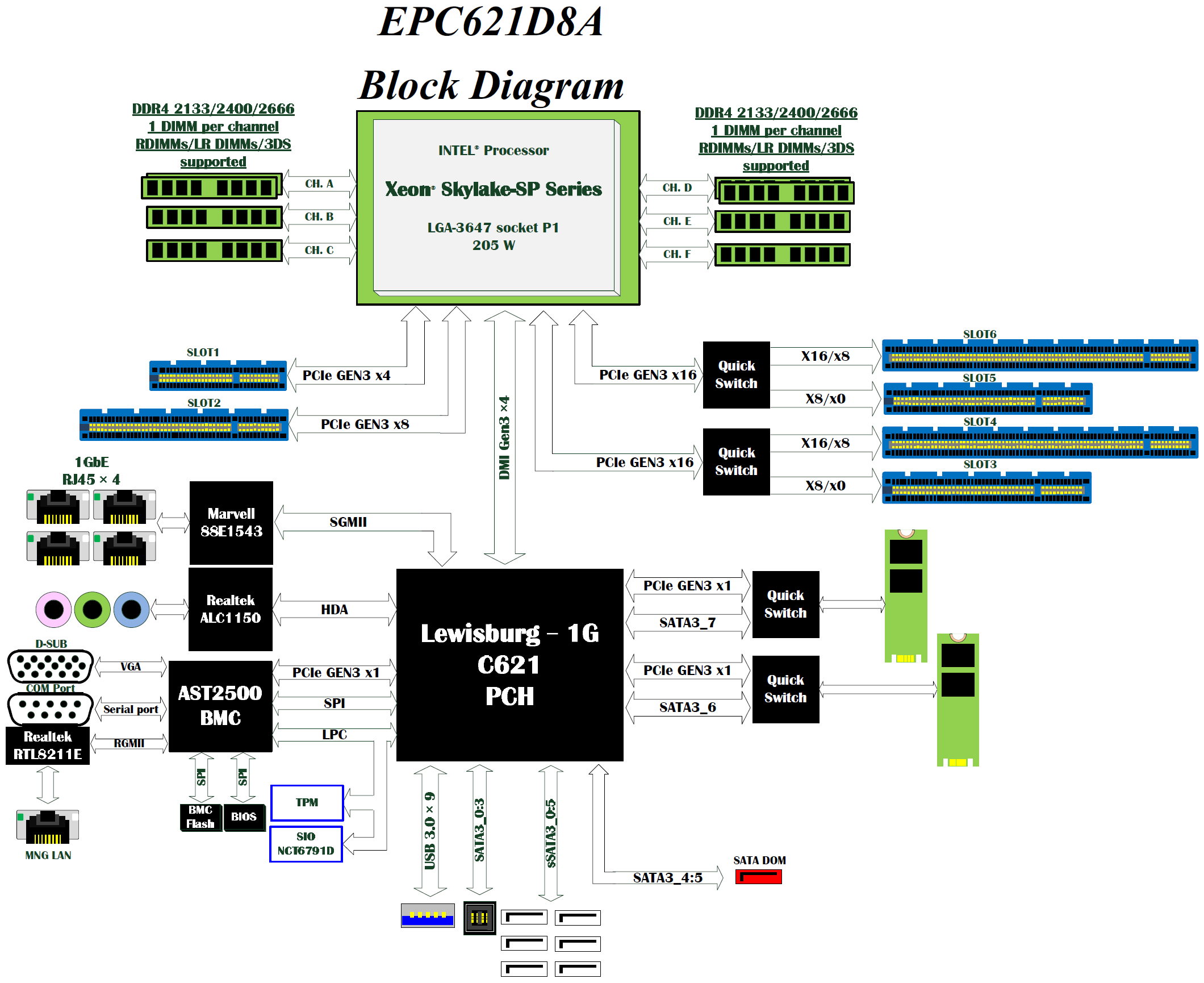

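This build uses up exactly the 44+4 PCIe lanes that come directly off the CPU on the LGA 3647 platform, which is deeply satisfying if you are a little obsessive-compulsive.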

Unfortunately the storage server has no redundant power supply, which lowers the availability of the whole system.

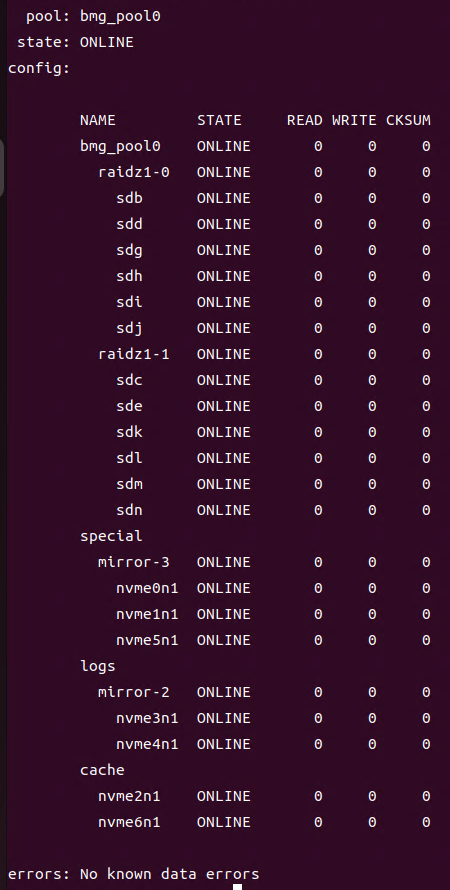

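The storage system runs Ubuntu Server + OpenZFS + NFS over RDMA (RoCE). I did not use TrueNAS Scale directly because it currently does not support RoCE. The pool uses every ZFS feature that helps I/O performance: the ARC, an L2ARC (Cache) VDEV, a ZIL (SLOG) VDEV and a Metadata (Special) VDEV.
- ARC: held in the 192GB of RAM.
- Cache VDEV: 2× ZhiTai 7100 1TB, 2-way stripe.
- SLOG VDEV: 2× Intel Optane 900P 280GB, 2-way mirror.
- Special VDEV: 3× ZhiTai 7100 1TB, 3-way mirror.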

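The data pool consists of two 6-disk RAID Z1 VDEVs. All the HDDs came from the same production batch, so I "aged" one HDD in each RAID Z1 VDEV. This reduces, to some extent, the chance of several HDDs in the same VDEV failing at once. My "aging" method was to plug the drive into my own server and run a 4K random performance test on it for a day.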
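The layout from the two paragraphs above can be created with a single `zpool create` command. The sketch below only prints that command so it can be reviewed before anything is run. Several parts are assumptions, not the author's actual values:
- The `/dev/disk/by-id/...` paths are hypothetical.
- The function name `build_zpool_create` is made up for this example.
- `ashift=12` assumes 4K-sector drives.

The `-O atime=off` flag mirrors the one property the author set by hand in section 4.

```bash
#!/usr/bin/env bash
# Sketch: print (do not run) a zpool create command for the layout above:
# 2x 6-disk RAID Z1 data VDEVs, 3-way mirrored special VDEV,
# 2-way mirrored SLOG, 2-way striped L2ARC. All device paths are HYPOTHETICAL.
build_zpool_create() {
  local pool="$1"
  local -a vdev_a vdev_b special slog cache cmd
  vdev_a=(/dev/disk/by-id/hdd-a{1..6})          # first RAID Z1 group
  vdev_b=(/dev/disk/by-id/hdd-b{1..6})          # second RAID Z1 group
  special=(/dev/disk/by-id/nvme-special{1..3})  # 3x ZhiTai 7100
  slog=(/dev/disk/by-id/nvme-slog{1..2})        # 2x Optane 900P
  cache=(/dev/disk/by-id/nvme-cache{1..2})      # 2x ZhiTai 7100

  # ashift=12 is an assumption (4K sectors); atime=off matches section 4.
  cmd=(zpool create -o ashift=12 -O atime=off "$pool"
    raidz1 "${vdev_a[@]}"
    raidz1 "${vdev_b[@]}"
    special mirror "${special[@]}"
    log mirror "${slog[@]}"
    cache "${cache[@]}")
  printf '%s\n' "${cmd[*]}"
}
```

For example, `build_zpool_create bmg_pool0` prints one line with the two `raidz1` groups followed by the `special`, `log` and `cache` VDEVs. After substituting real device IDs, run it by hand as root.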

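The switch lacks the key features RoCE needs, so the storage network is not an RDMA network in the strict sense. Even so, later measurements showed a clear benefit from two changes on the hosts alone: enabling RDMA on the server and client hosts, and mounting the share with NFS over RDMA. With these, random read/write performance was noticeably higher than with a plain Ethernet setup.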
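Below is a minimal sketch of the host-side setup this paragraph describes. It follows the Linux kernel's NFS/RDMA documentation: nfsd gets an extra RDMA listener on the conventional port 20049, and the client mounts with the `rdma` option.

Several values are hypothetical:
- the export line and client subnet;
- the server address `10.0.0.2`;
- the mount point.

The kernel documentation asks for the `insecure` export option because the NFS/RDMA client does not use a reserved source port.

```bash
# --- On the NAS (server), as root ---
# Hypothetical export: share the pool with the cluster subnet.
# "insecure" is required because NFS/RDMA clients do not use a reserved port.
echo '/bmg_pool0 10.0.0.0/24(rw,sync,insecure,no_subtree_check)' >> /etc/exports
exportfs -ra
# Load the RPC-over-RDMA transport and add an RDMA listener on port 20049.
# This is not persistent across reboots or nfs-server restarts.
modprobe rpcrdma
echo 'rdma 20049' > /proc/fs/nfsd/portlist

# --- On each client, as root ---
modprobe rpcrdma
mkdir -p /bmg_pool0
mount -t nfs -o rdma,port=20049 10.0.0.2:/bmg_pool0 /bmg_pool0   # 10.0.0.2 is hypothetical
```

Afterwards, `cat /proc/fs/nfsd/portlist` on the server should list an `rdma 20049` entry alongside the TCP listener.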

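The switch was custom-built for a large tech company (a "white-box switch"). When it arrived there was no technical documentation at all and it was extremely loud. The seller said it only supported 40G and 10G port speeds and recommended using it as a dumb unmanaged switch. I refused to accept that. Working on and off for a few days on experience and intuition, I got LACP link aggregation, fan speed control and port speed changes working. Along the way I accidentally bricked the switch once; after some digging I got into the system shell and repaired the configuration file. Afterwards I walked the seller through all of this step by step.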

2 Installation photos

▼Front of the chassis

▼Side of the chassis

▼Back of the chassis

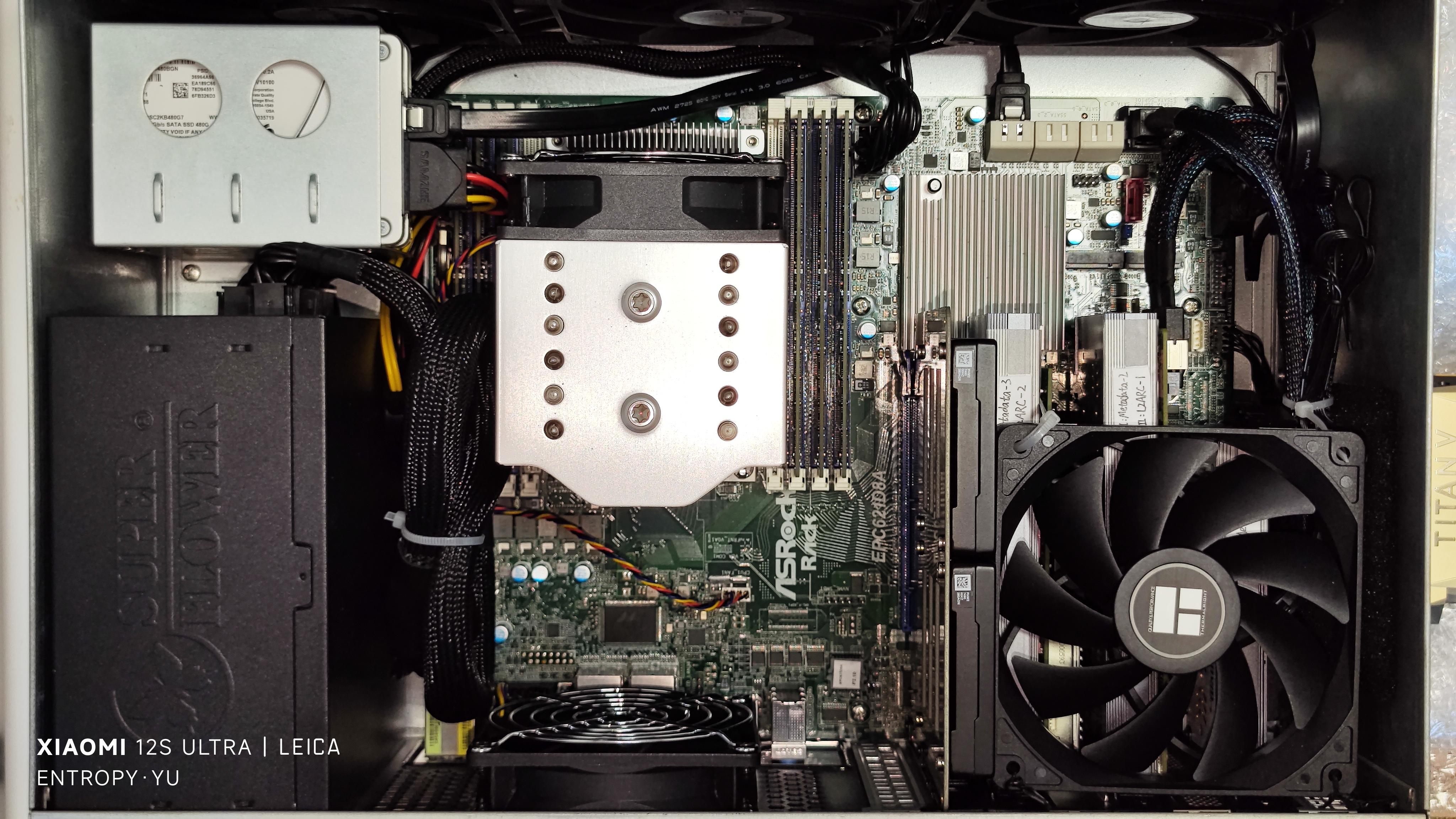

▼CPU and memory

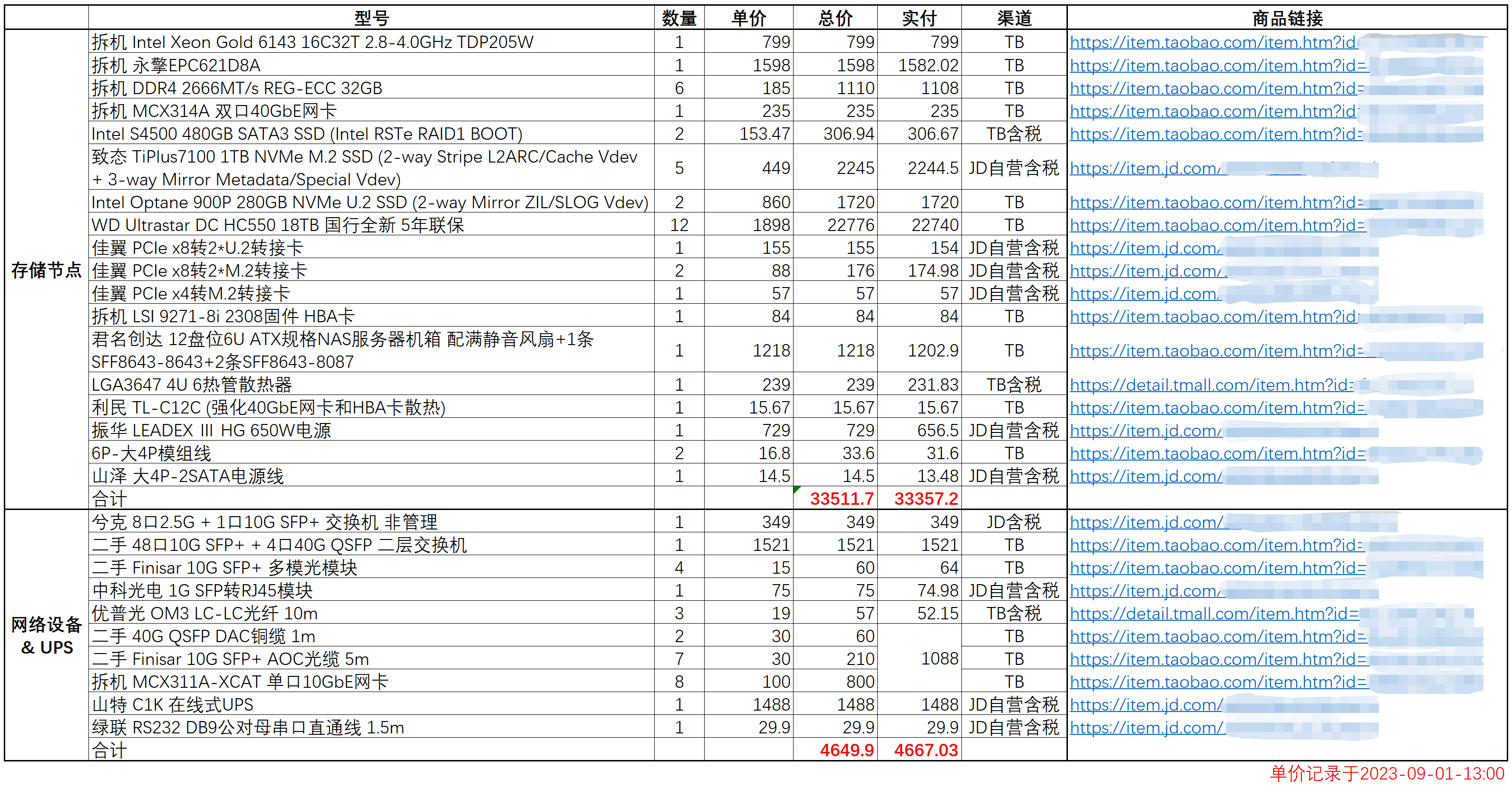

▼Installing the CPU

▼Motherboard

▼The three-piece set

▼A pile of ZhiTai 7100s & adapter cards

▼Optane 900P

▼Optane 900P with U.2 adapter card

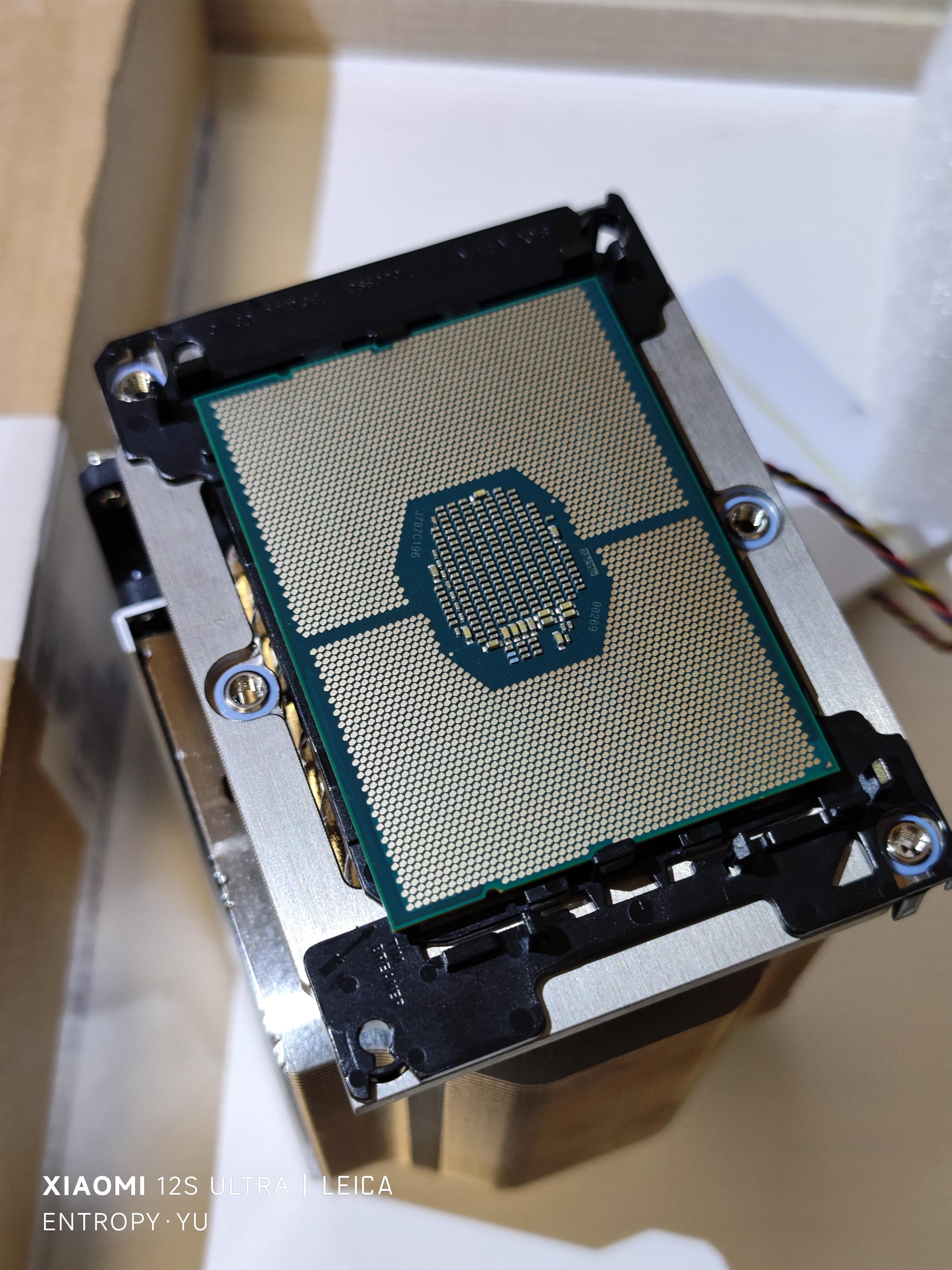

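▼A pile of HDD boxes

▼A pile of 18TB HDDs, labeled to tell them apart: "I" marks HDDs connected to the motherboard's SFF-8643 ports, "E" marks HDDs connected to the HBA card's SFF-8087 ports

▼Antique HBA card

▼Antique 40G NIC, MCX341A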

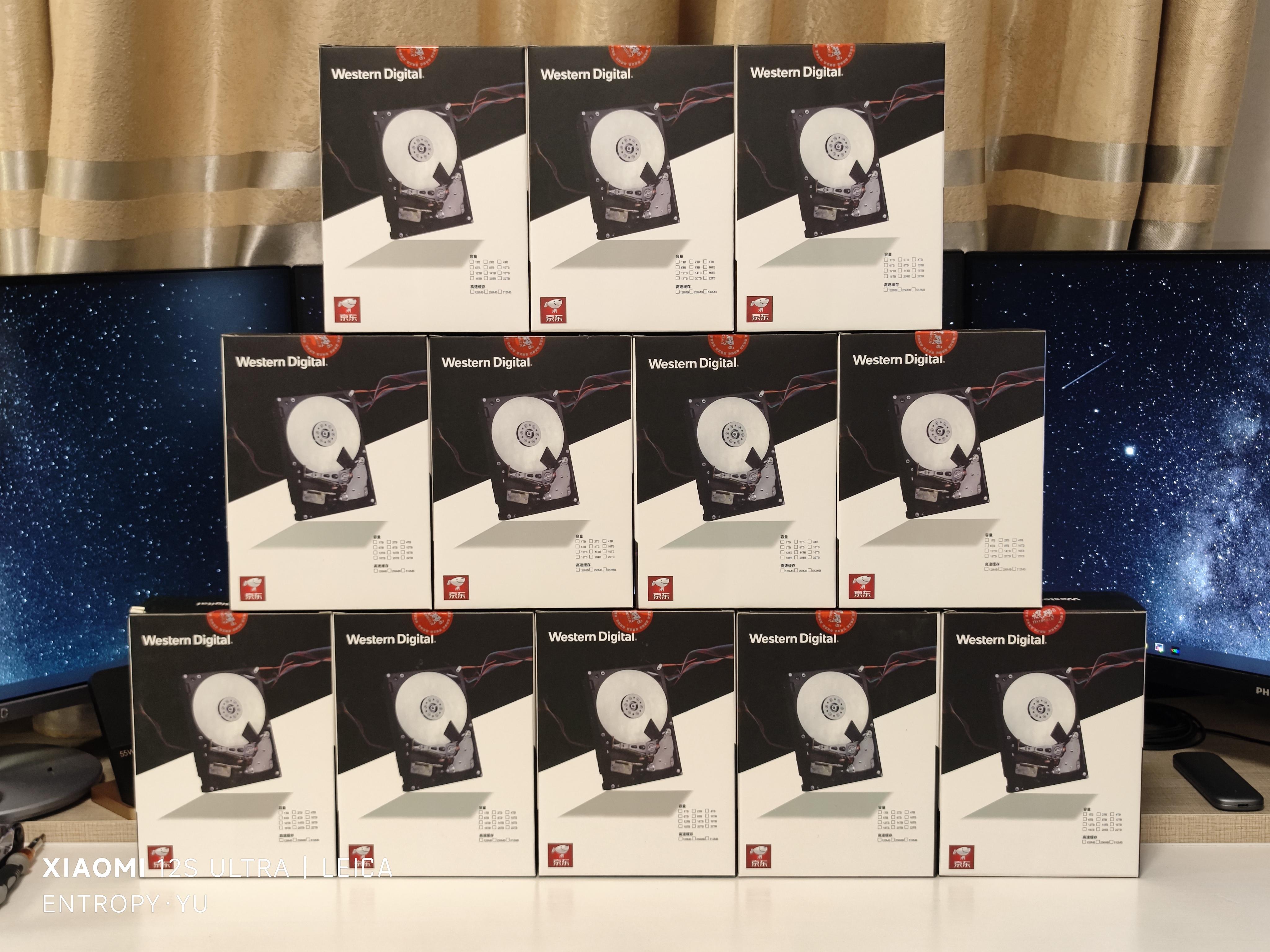

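▼Thermal pads on the back of the NIC and the HBA card bond them to the huge heatsink of the M.2 expansion card to help with cooling

▼Afterwards I found the HBA card still reached 70°C

▼So I added a TL-C12C fan

▼For reliability, each of the 3 HDD groups got its own Molex (large 4-pin) modular power cable. That left nowhere to plug in the SATA power cable for the system drives, so I had to run another Molex connector back up from below and adapt it to power them

▼NAS, upper level

▼NAS, lower level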

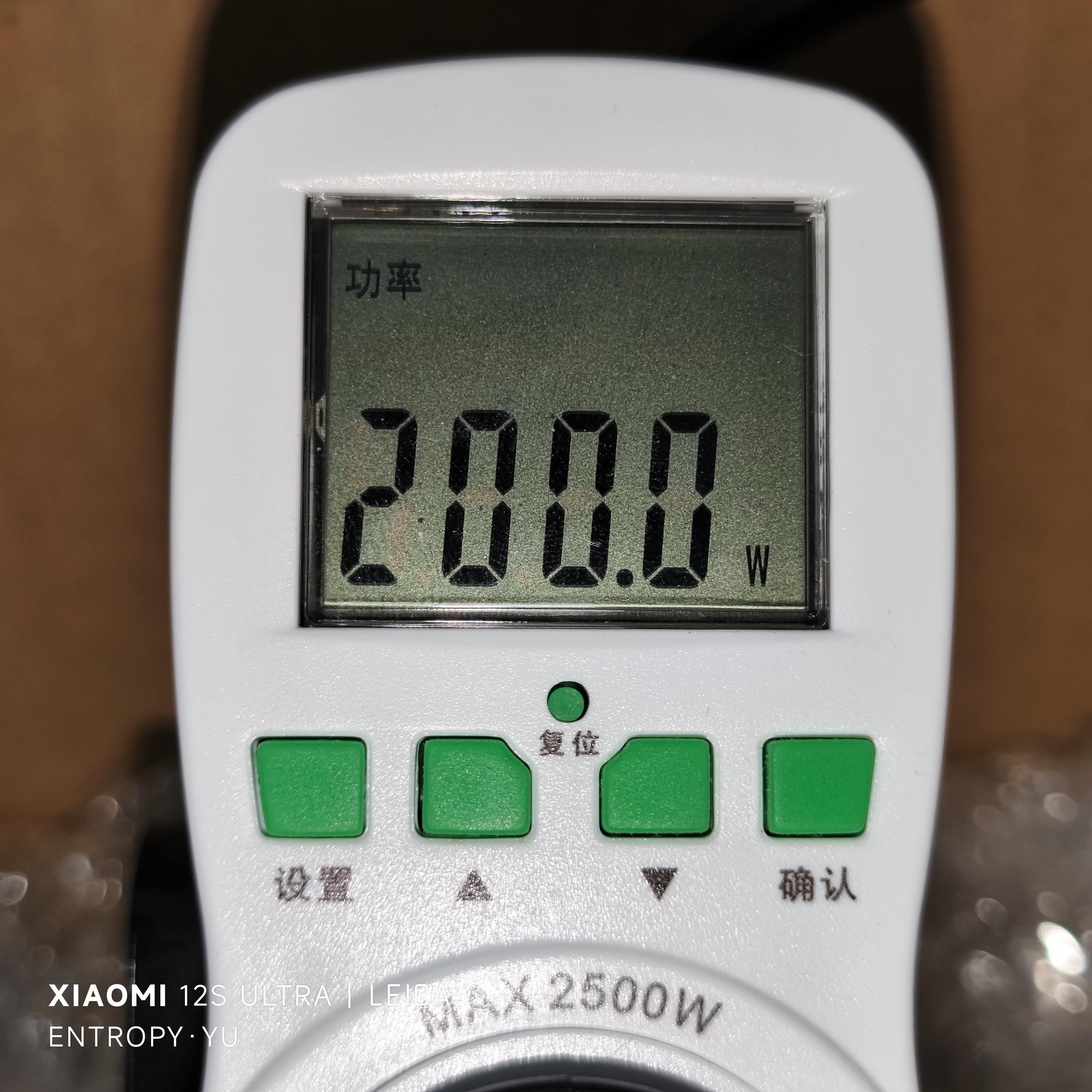

▼Idle power draw is 200W: a true "strategic partner" of the State Grid

▼Switch (48×10G SFP+ & 4×40G QSFP+)

3 Configuration screenshots

▼Motherboard block diagram

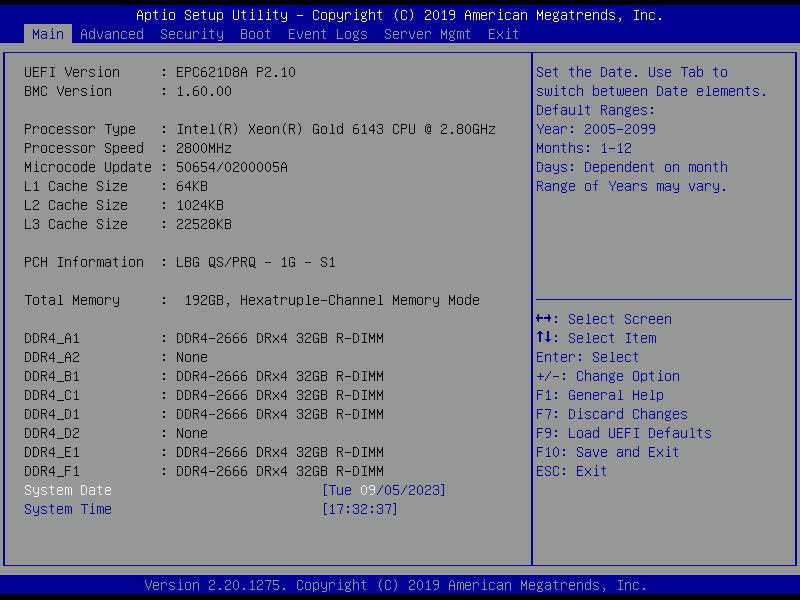

▼BIOS main page

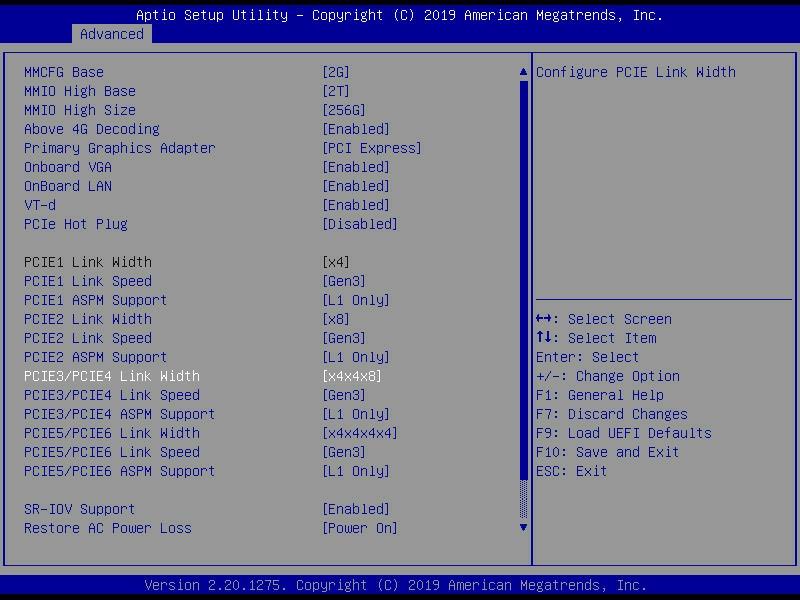

▼Setting PCIe bifurcation and link speed. The speed is forced to Gen 3 because, on "auto", some SSDs were seen to occasionally drop to Gen 2 or Gen 1

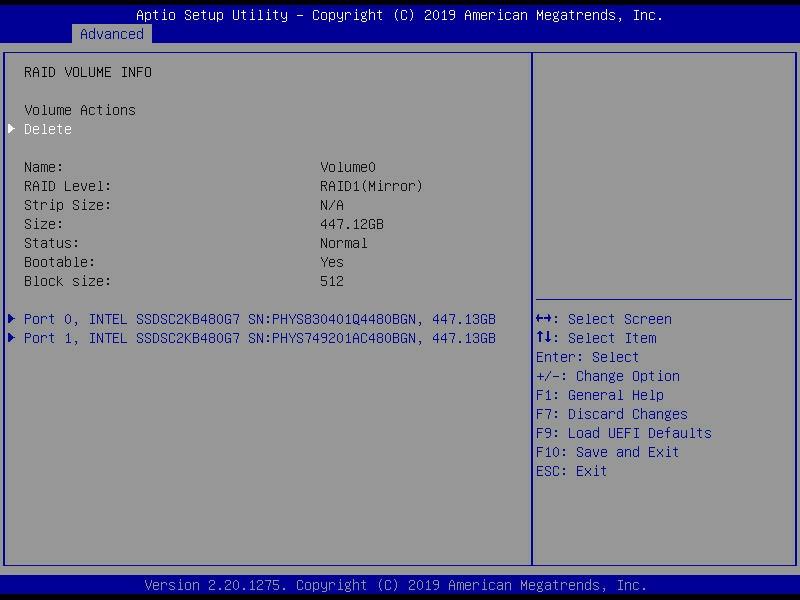
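After forcing Gen 3 in the BIOS, you can check from Linux that every NVMe device actually negotiated it. The sketch below uses the standard PCI sysfs attributes `current_link_speed` and `current_link_width`. The function name and the `SYSFS_ROOT` override are illustrative; the override exists only so the function can be tested against a fake directory tree.

```bash
#!/usr/bin/env bash
# Sketch: report NVMe controllers whose negotiated PCIe link speed is below
# an expected value (default 8 GT/s = Gen 3), read from sysfs.
check_nvme_links() {
  local want="${1:-8}"                 # expected GT/s: Gen3=8, Gen2=5, Gen1=2.5
  local root="${SYSFS_ROOT:-/sys}"
  local ctrl dev speed width
  for ctrl in "$root"/class/nvme/nvme*; do
    dev="$ctrl/device"
    [ -e "$dev/current_link_speed" ] || continue
    speed="$(cut -d' ' -f1 < "$dev/current_link_speed")"  # "8.0 GT/s PCIe" -> "8.0"
    width="$(cat "$dev/current_link_width")"
    # awk does the floating-point comparison (2.5 GT/s is not an integer).
    if awk -v s="$speed" -v w="$want" 'BEGIN { exit !(s + 0 < w + 0) }'; then
      echo "DOWNGRADED $(basename "$ctrl"): ${speed} GT/s x${width} (expected ${want} GT/s)"
    else
      echo "OK $(basename "$ctrl"): ${speed} GT/s x${width}"
    fi
  done
}
```

Run `check_nvme_links` after boot, or again after a heavy benchmark. Any `DOWNGRADED` line points at a slot or adapter worth inspecting.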

▼Configuring RAID 1 for the system drives in the BIOS

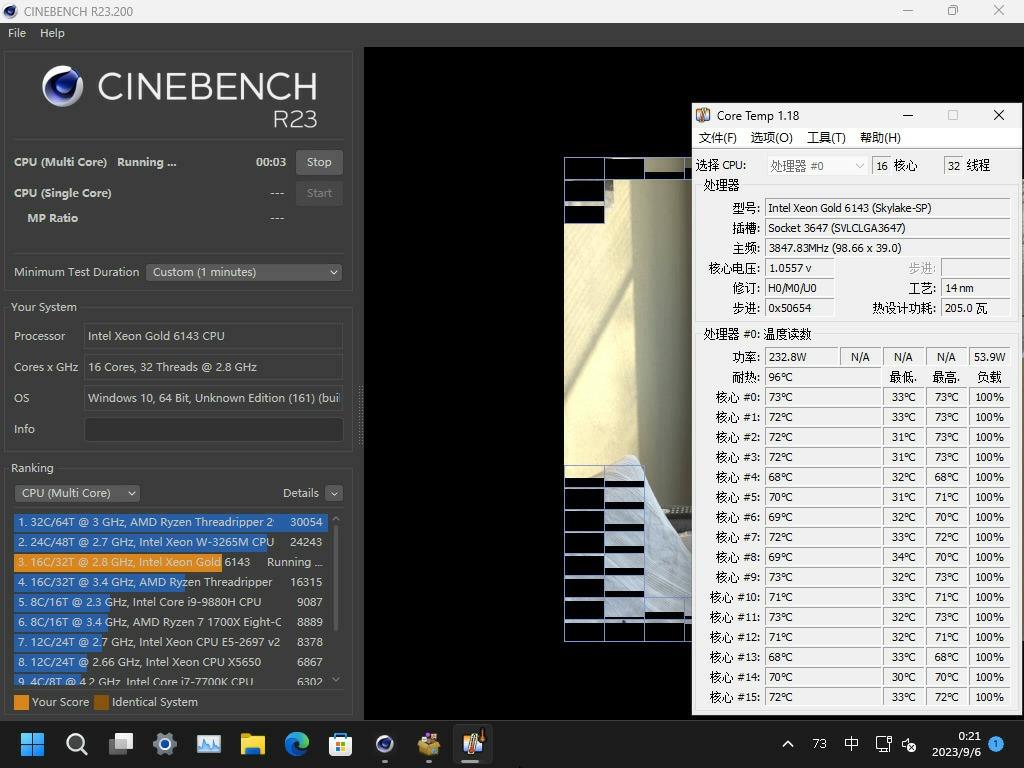

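▼I installed Windows first for some benchmarks: the CPU draws 230W and runs all 16 cores at 3.9GHz, a remarkable frequency for a first-generation Xeon Scalable CPU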

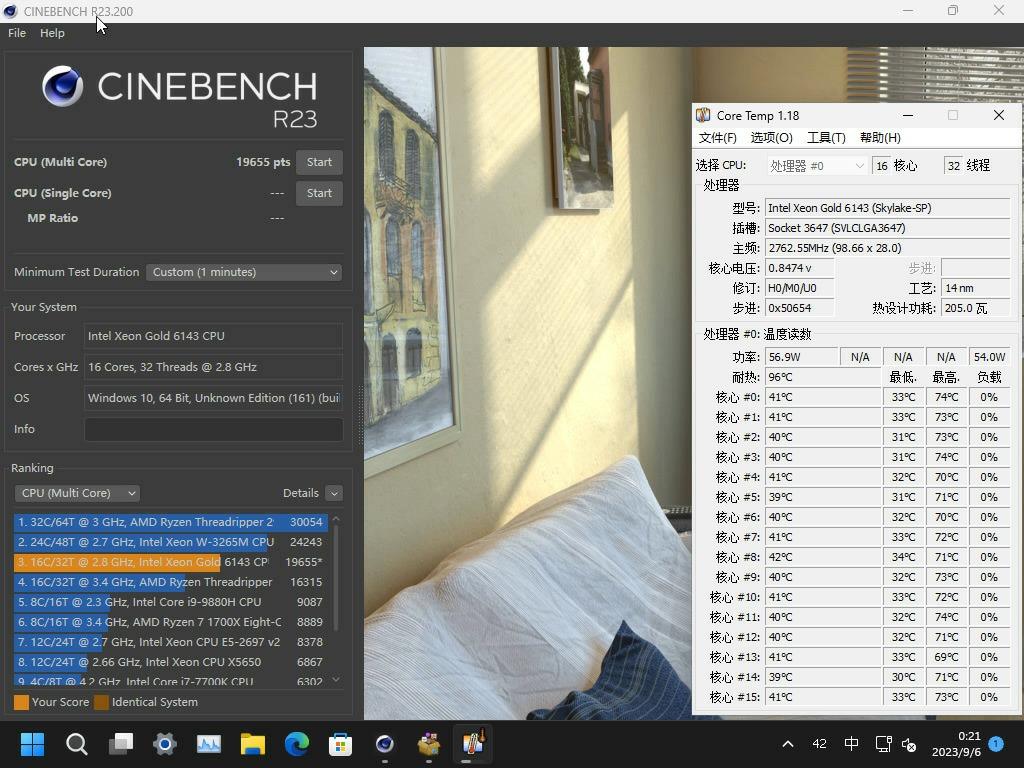

▼Cinebench R23 score of 19655 pts, roughly half that of an AMD Ryzen 7950X

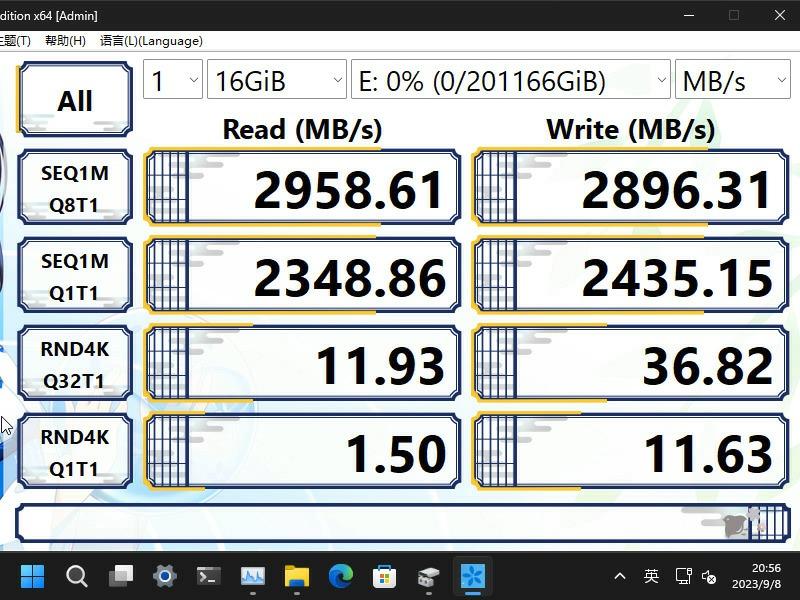

▼12×18TB HC550, Windows striped volume (RAID 0)

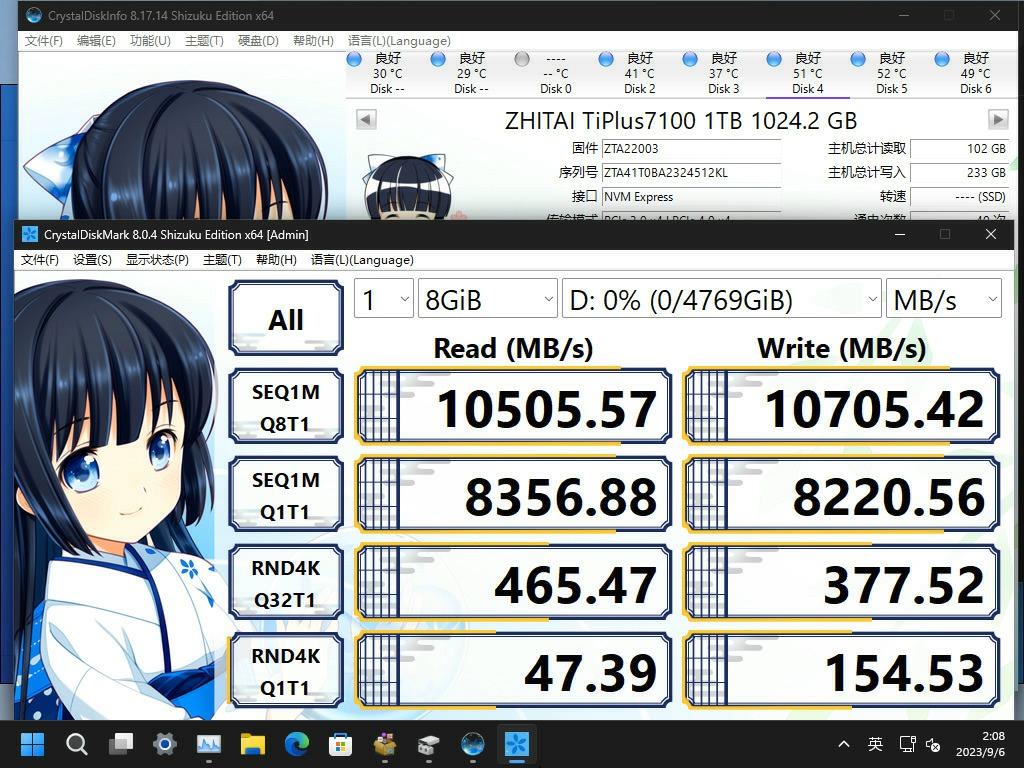

▼5×ZhiTai 7100 @ PCIe Gen3 x4, Windows striped volume (RAID 0)

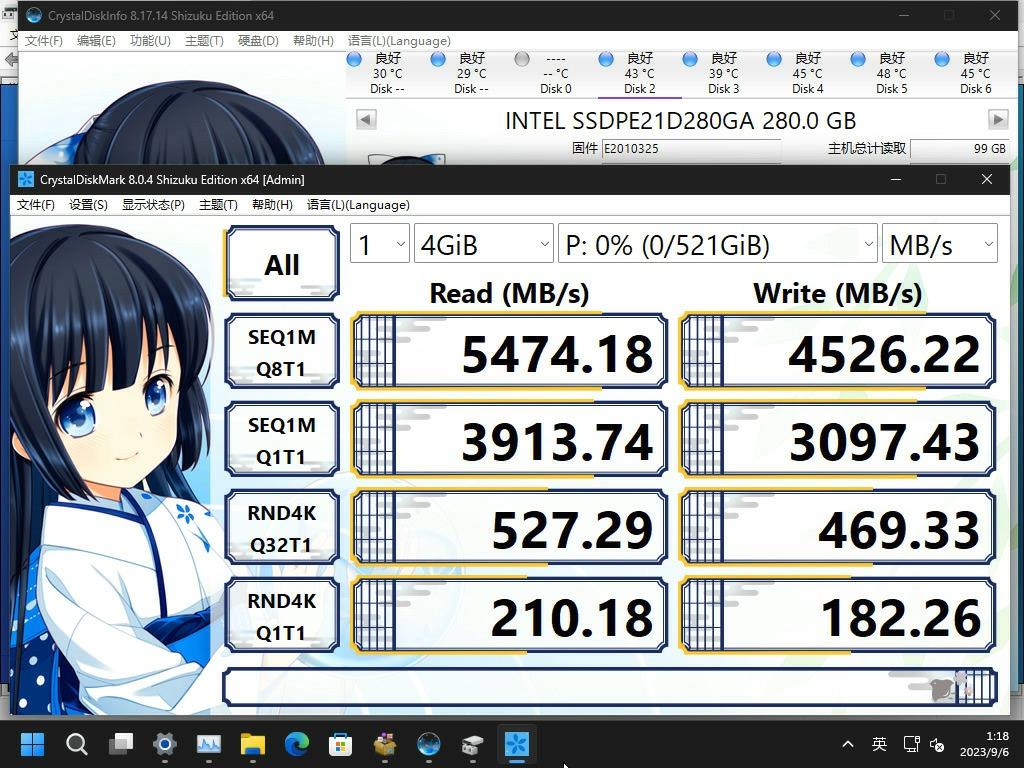

▼2×Optane 900P, Windows striped volume (RAID 0)

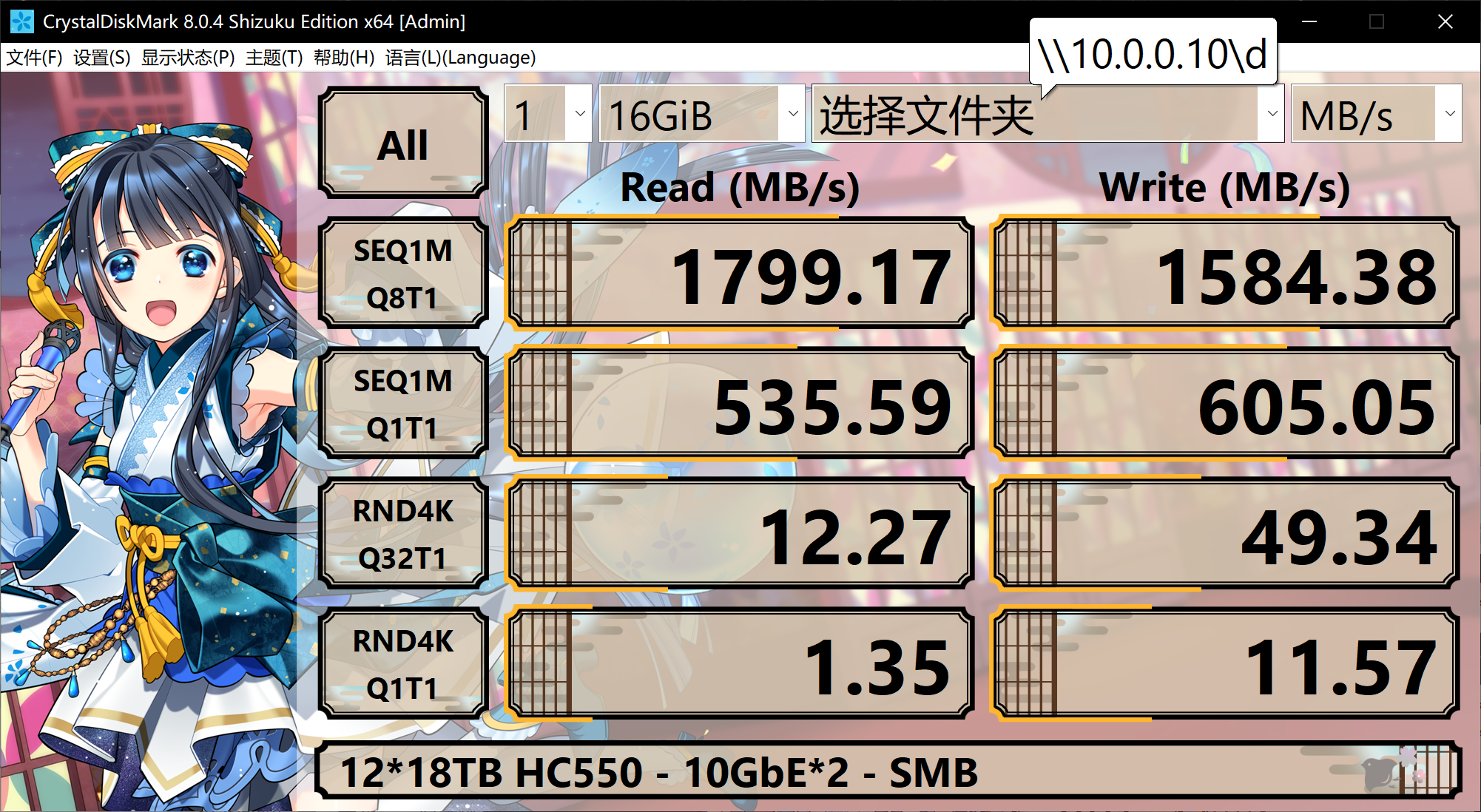

▼Windows SMB (RDMA enabled by default), HDD pool

▼Windows SMB (RDMA enabled by default), Optane 900P

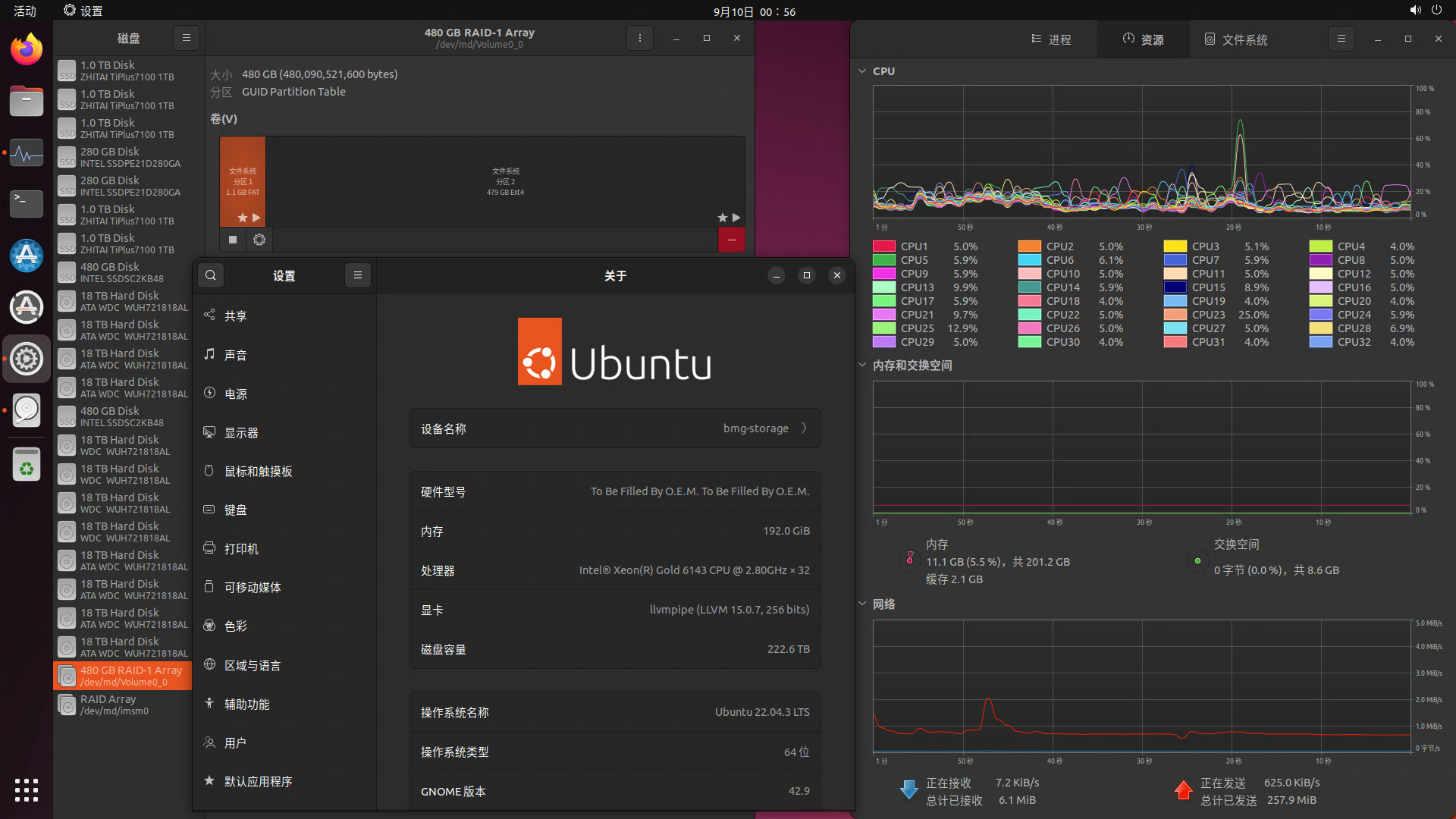

▼Ubuntu Server 22.04.3 LTS with the Desktop GUI added afterwards. Why not install the Desktop edition in the first place? Because only the Server installer lets you choose a RAID install

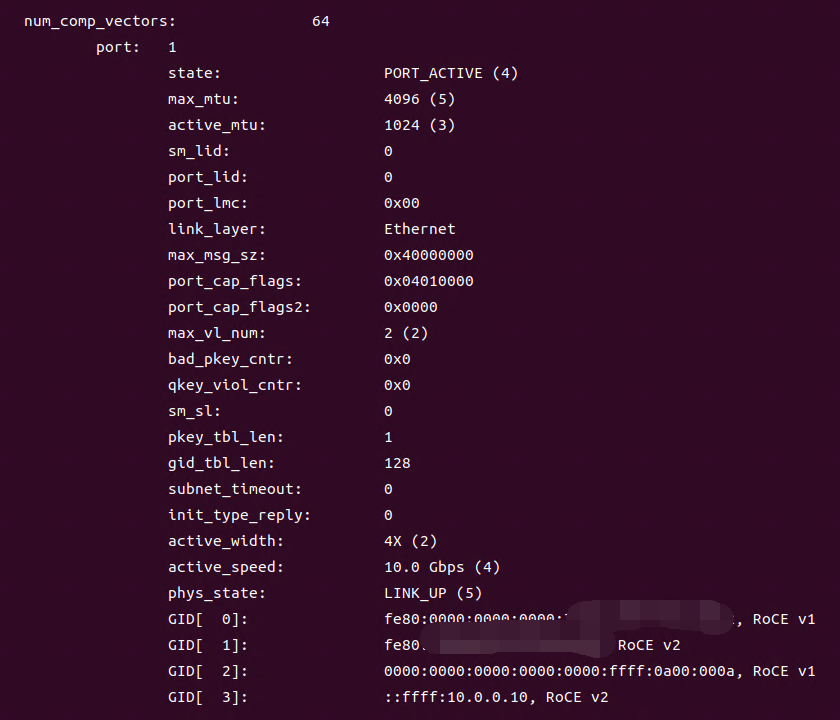

▼Configuring RDMA; this is part of the `ibv_devinfo -v` output after configuration

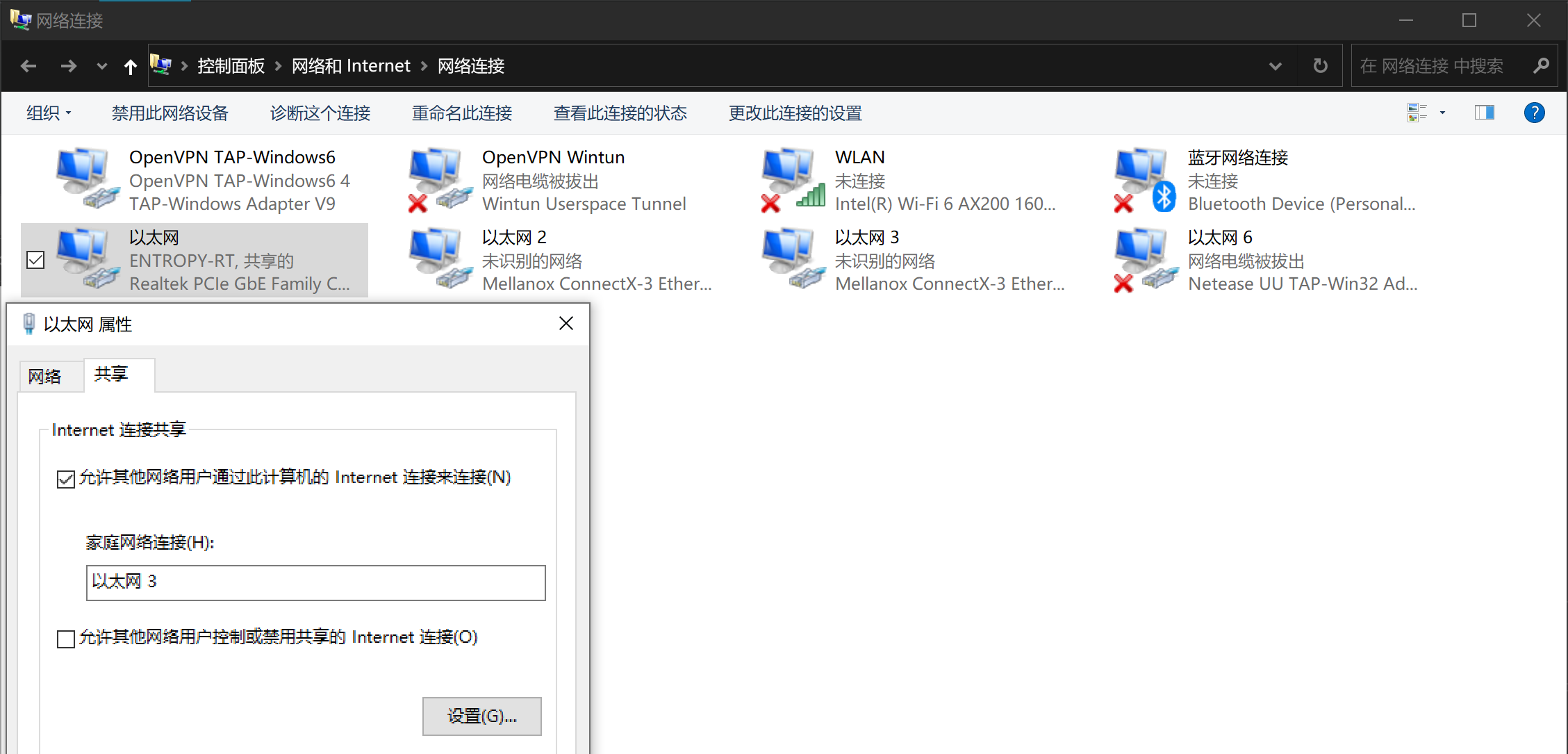
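Checking the full `ibv_devinfo` listing by eye gets tedious across several hosts. The sketch below condenses it to one line per port: port state, link layer and active MTU. A working RoCE port should show `PORT_ACTIVE` and `link_layer=Ethernet`. The function name is illustrative. The sample in the test is a hand-written excerpt in `ibv_devinfo`'s format (`mlx4_0` is the usual device name for ConnectX-3 cards), not the author's output.

```bash
# Sketch: condense `ibv_devinfo` output (read on stdin) to one line per port.
# Matching on the first token keeps "phys_state:" and "phys_port_cnt:"
# from being mistaken for "state:" and "port:".
summarize_ibv() {
  awk '
    $1 == "hca_id:"     { hca = $2 }
    $1 == "port:"       { port = $2; state = mtu = "?" }
    $1 == "state:"      { state = $2 }
    $1 == "active_mtu:" { mtu = $2 }
    $1 == "link_layer:" { printf "%s port %s: %s link_layer=%s active_mtu=%s\n", hca, port, state, $2, mtu }
  '
}
```

Usage: `ibv_devinfo | summarize_ibv`. It also works on the `-v` output.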

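▼With the Windows PC's Internet Connection Sharing, the whole cluster can reach the campus network and the Internet even though the campus network requires a web login (in effect, the Windows PC acts as a router)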

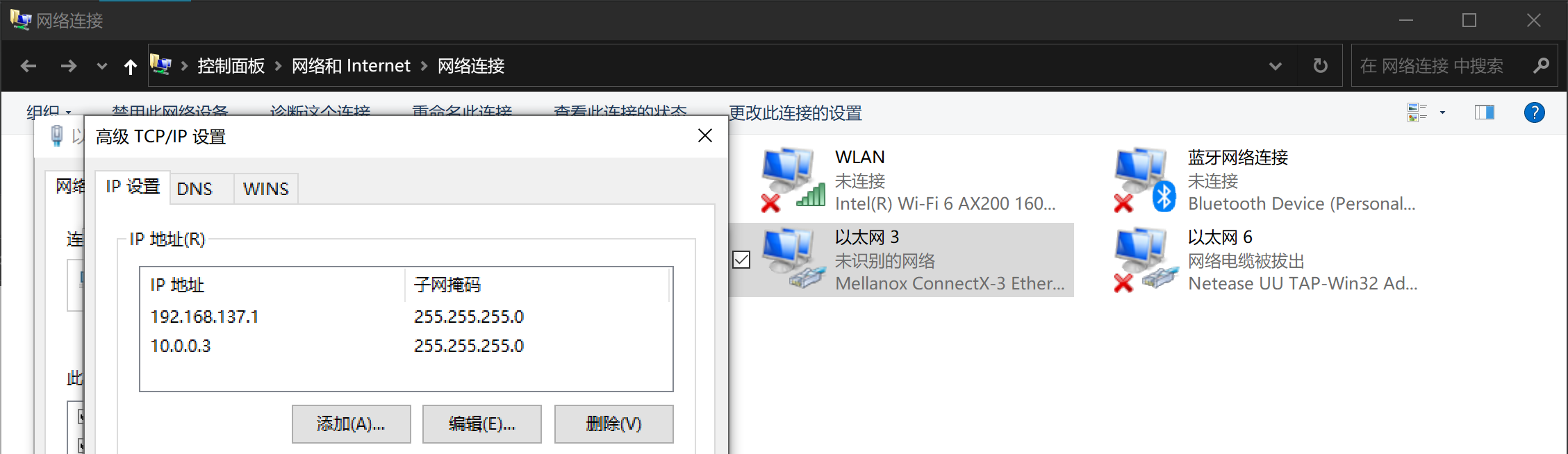

▼Add 2 IP addresses to the NIC connected to the cluster's 10GbE switch so that the cluster subnet and the Windows PC can reach each other

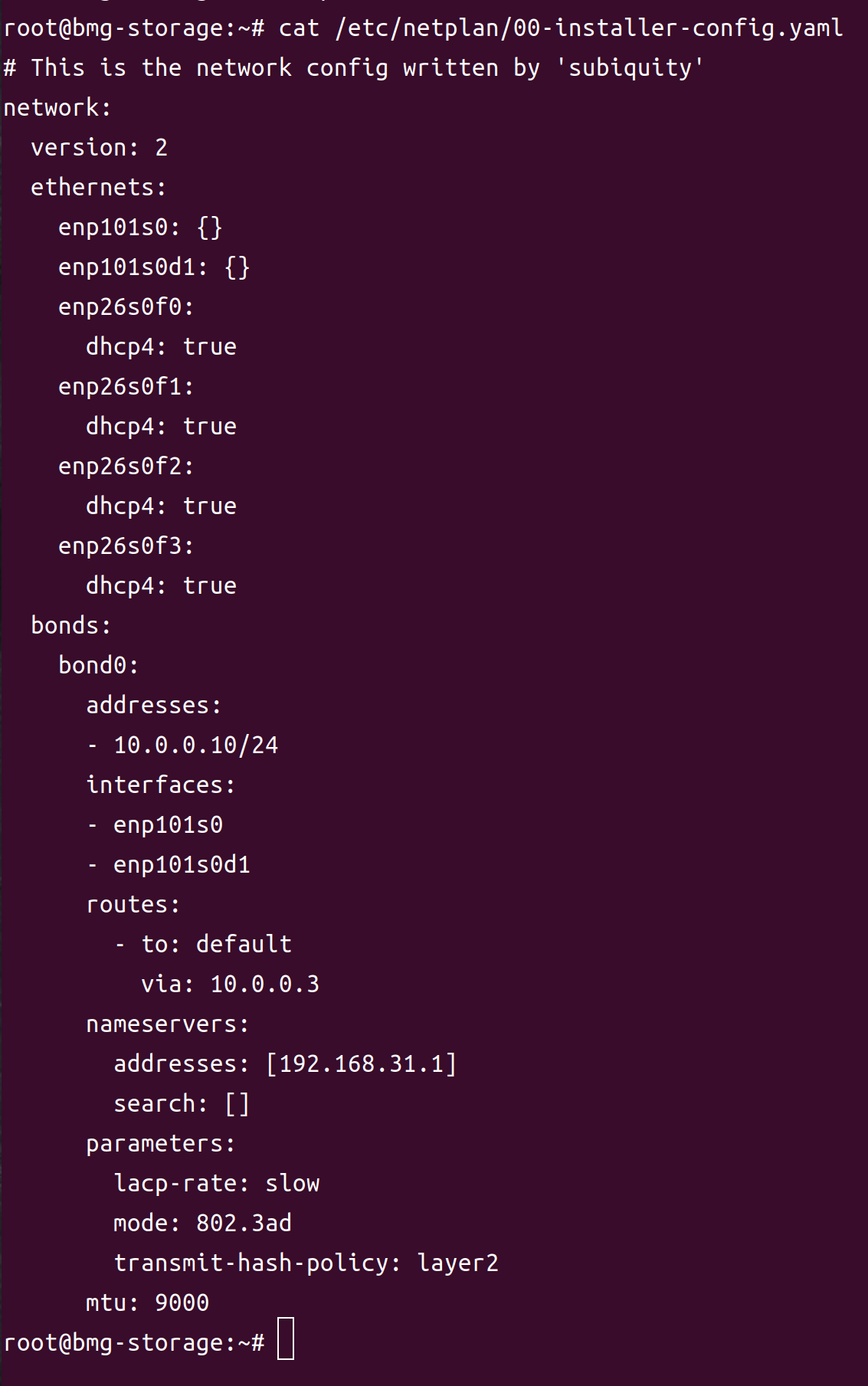

▼netplan network configuration: the gateway is the Windows PC's IP address in the cluster subnet (10.0.0.3), DNS is the same as on the Windows PC (192.168.31.1), MTU=9000

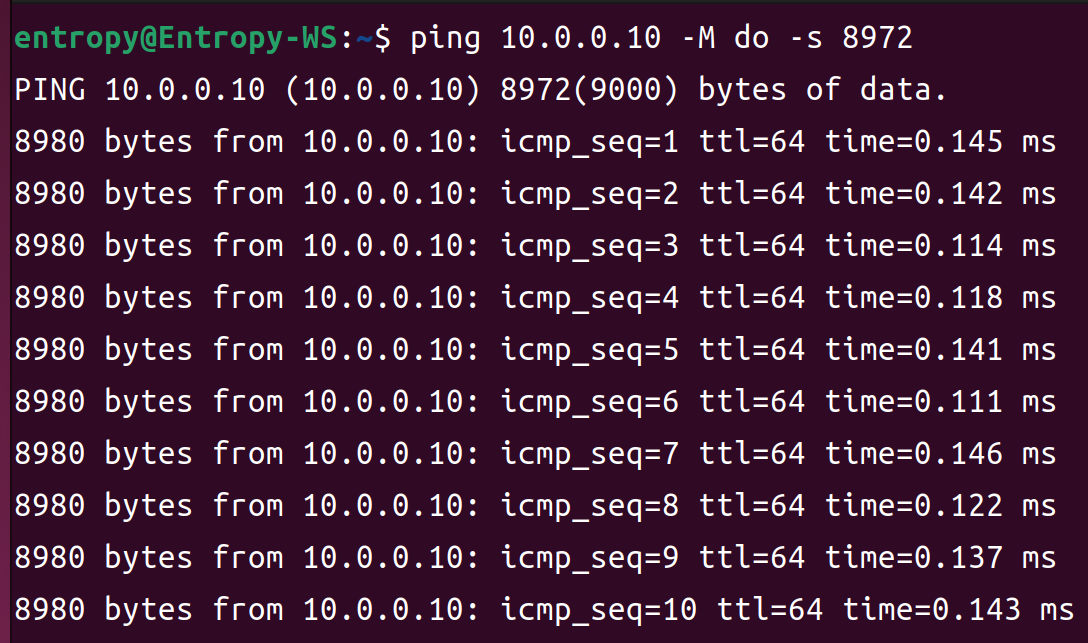
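Below is a sketch of a netplan file with the gateway, DNS and MTU values from the caption above. The interface name `enp94s0`, the address `10.0.0.2/24` and the file name `60-cluster.yaml` are hypothetical. The default route uses the `routes:` form, because the older `gateway4` key is deprecated in the netplan shipped with Ubuntu 22.04.

```bash
# Hypothetical interface, address and file name; gateway, DNS and MTU as above.
sudo tee /etc/netplan/60-cluster.yaml > /dev/null <<'EOF'
network:
  version: 2
  ethernets:
    enp94s0:                    # hypothetical NIC name
      mtu: 9000
      addresses: [10.0.0.2/24]  # hypothetical NAS address in the cluster subnet
      routes:
        - to: default
          via: 10.0.0.3         # Windows PC doing Internet Connection Sharing
      nameservers:
        addresses: [192.168.31.1]
EOF
sudo chmod 600 /etc/netplan/60-cluster.yaml
# Applies the config and rolls back automatically unless confirmed.
sudo netplan try
```

If the installer's own file in `/etc/netplan/` already configures the same interface, netplan merges the two files. Keeping all settings for one NIC in a single file avoids surprises.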

▼Using ping to confirm that the MTU is set correctly along the server-switch-client path
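The usual way to run this check is to ping with the "don't fragment" flag (`-M do`) and the largest payload that fits in one frame. For IPv4 that payload is the MTU minus 20 bytes of IP header and 8 bytes of ICMP header. The sketch below wraps that arithmetic. The function names are illustrative, and `ping` is the Linux iputils version.

```bash
# Largest ICMP echo payload that fits unfragmented in one IPv4 packet:
# MTU - 20 (IPv4 header) - 8 (ICMP header).
icmp_payload_for_mtu() {
  echo $(( $1 - 20 - 8 ))
}

# Succeeds if a full-size frame gets through with DF set and a frame one
# byte larger is refused.
check_jumbo_path() {
  local host="$1" mtu="${2:-9000}"
  local payload
  payload="$(icmp_payload_for_mtu "$mtu")"
  ping -c 3 -M do -s "$payload" "$host" &&
    ! ping -c 1 -M do -s "$(( payload + 1 ))" "$host"
}
```

With MTU 9000 the payload is 8972 bytes, so `check_jumbo_path <client-ip>` boils down to `ping -M do -s 8972 <client-ip>`. If the switch or the far end is still at 1500, that ping gets no replies.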

▼Creating the ZFS pool; this is the `zpool status` output after creation

4 Rough ZFS tuning

ZFS has a large number of tunable parameters; see the official documentation: https://openzfs.github.io/openzfs-docs/Performance%20and%20Tuning/Module%20Parameters.html

I adjusted the following parameters and put them in /etc/modprobe.d/zfs.conf so that they are loaded automatically at boot:

```
options zfs l2arc_noprefetch=0
options zfs zfs_arc_lotsfree_percent=2
options zfs zfs_arc_max=191106155315
options zfs l2arc_write_boost=10000000000
options zfs l2arc_write_max=10000000000
```
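A file in /etc/modprobe.d only takes effect the next time the module loads. Most of these parameters can also be changed on a running system through `/sys/module/zfs/parameters/`. The sketch below pushes every `options zfs ...` line from the config file into sysfs and skips anything that is not writable at runtime. The function name and the `CONF`/`PARAM_DIR` overrides are illustrative; the overrides allow a dry run against a scratch directory. If the zfs module is loaded from the initramfs (for example with root on ZFS), the initramfs also needs `update-initramfs -u` to pick up the file.

```bash
# Sketch: apply "options zfs name=value ..." lines to the running module.
apply_zfs_options() {
  local conf="${CONF:-/etc/modprobe.d/zfs.conf}"
  local pdir="${PARAM_DIR:-/sys/module/zfs/parameters}"
  local kw mod rest kv name value
  while read -r kw mod rest || [ -n "$kw" ]; do
    [ "$kw" = "options" ] && [ "$mod" = "zfs" ] || continue
    for kv in $rest; do                 # one line may carry several name=value pairs
      name="${kv%%=*}"; value="${kv#*=}"
      if [ -w "$pdir/$name" ]; then
        echo "$value" > "$pdir/$name"
        echo "$name=$(cat "$pdir/$name")"   # read back what the module accepted
      else
        echo "skip $name (not writable at runtime)" >&2
      fi
    done
  done < "$conf"
}
```

Run it as root with no arguments. Each line it prints is the value read back from the module, which is a quick way to catch typos in parameter names.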

The command used to obtain "zfs_arc_max=191106155315" (95% of MemTotal, in bytes):

```
grep '^MemTotal' /proc/meminfo | awk '{printf "%d", $2 * 1024 * 0.95}'
```

Set the ratio to suit your own system. This NAS only serves NFS to the cluster, so system services use very little RAM and leaving 5% free is enough.
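To make the ratio an explicit parameter, the same calculation can be written as a small function using 64-bit integer arithmetic. `MemTotal` in /proc/meminfo is in kB (KiB), hence the factor 1024. Integer division truncates just like the `%d` in the awk one-liner. The function names are illustrative.

```bash
# zfs_arc_max in bytes = MemTotal (KiB) * 1024 * percent / 100, truncated.
arc_max_bytes() {
  local mem_kib="$1" percent="$2"
  echo $(( mem_kib * 1024 * percent / 100 ))
}

# Same, reading MemTotal from the running system (default 95%).
arc_max_from_meminfo() {
  local percent="${1:-95}"
  arc_max_bytes "$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)" "$percent"
}
```

For example, a MemTotal of 196449584 kB at 95% gives exactly the 191106155315 used above. A machine that also runs other services can pass a smaller percentage, e.g. `arc_max_from_meminfo 75`.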

Below are the property values of the parent dataset (atime=off was set manually; everything else is at its default):

```
root@bmg-storage:~# zfs get all
NAME       PROPERTY              VALUE                  SOURCE
bmg_pool0  type                  filesystem             -
bmg_pool0  creation              三 9月 10 15:11 2023   -
bmg_pool0  used                  256G                   -
bmg_pool0  available             157T                   -
bmg_pool0  referenced            256G                   -
bmg_pool0  compressratio         1.00x                  -
bmg_pool0  mounted               yes                    -
bmg_pool0  quota                 none                   default
bmg_pool0  reservation           none                   default
bmg_pool0  recordsize            128K                   default
bmg_pool0  mountpoint            /bmg_pool0             default
bmg_pool0  sharenfs              off                    default
bmg_pool0  checksum              on                     default
bmg_pool0  compression           off                    default
bmg_pool0  atime                 off                    local
bmg_pool0  devices               on                     default
bmg_pool0  exec                  on                     default
bmg_pool0  setuid                on                     default
bmg_pool0  readonly              off                    default
bmg_pool0  zoned                 off                    default
bmg_pool0  snapdir               hidden                 default
bmg_pool0  aclmode               discard                default
bmg_pool0  aclinherit            restricted             default
bmg_pool0  createtxg             1                      -
bmg_pool0  canmount              on                     default
bmg_pool0  xattr                 on                     default
bmg_pool0  copies                1                      default
bmg_pool0  version               5                      -
bmg_pool0  utf8only              off                    -
bmg_pool0  normalization         none                   -
bmg_pool0  casesensitivity       sensitive              -
bmg_pool0  vscan                 off                    default
bmg_pool0  nbmand                off                    default
bmg_pool0  sharesmb              off                    default
bmg_pool0  refquota              none                   default
bmg_pool0  refreservation        none                   default
bmg_pool0  guid                  3915595269322674287    -
bmg_pool0  primarycache          all                    default
bmg_pool0  secondarycache        all                    default
bmg_pool0  usedbysnapshots       0B                     -
bmg_pool0  usedbydataset         256G                   -
bmg_pool0  usedbychildren        1.21M                  -
bmg_pool0  usedbyrefreservation  0B                     -
bmg_pool0  logbias               latency                default
bmg_pool0  objsetid              54                     -
bmg_pool0  dedup                 off                    default
bmg_pool0  mlslabel              none                   default
bmg_pool0  sync                  standard               default
bmg_pool0  dnodesize             legacy                 default
bmg_pool0  refcompressratio      1.00x                  -
bmg_pool0  written               256G                   -
bmg_pool0  logicalused           256G                   -
bmg_pool0  logicalreferenced     256G                   -
bmg_pool0  volmode               default                default
bmg_pool0  filesystem_limit      none                   default
bmg_pool0  snapshot_limit        none                   default
bmg_pool0  filesystem_count      none                   default
bmg_pool0  snapshot_count        none                   default
bmg_pool0  snapdev               hidden                 default
bmg_pool0  acltype               off                    default
bmg_pool0  context               none                   default
bmg_pool0  fscontext             none                   default
bmg_pool0  defcontext            none                   default
bmg_pool0  rootcontext           none                   default
bmg_pool0  relatime              off                    default
bmg_pool0  redundant_metadata    all                    default
bmg_pool0  overlay               on                     default
bmg_pool0  encryption            off                    default
bmg_pool0  keylocation           none                   default
bmg_pool0  keyformat             none                   default
bmg_pool0  pbkdf2iters           0                      default
bmg_pool0  special_small_blocks  0                      default
```

5 FIO performance tests

The PC mounts the NAS's NFS share over 10GbE RoCE, in sync mode.
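The five fio runs below share one `[global]` section. They differ only in file size, block size, access pattern, queue depth and read/write mix. The sketch below generates those job files from the varying parameters and reproduces the log-file naming used in the runs. The function name is illustrative. Note that fio's `write_bw_log`, `write_iops_log` and `write_lat_log` options mean "write a bandwidth/IOPS/latency log", so they apply to read tests too.

```bash
# Sketch: emit a fio job file matching the runs below.
# Args: size bs rw iodepth [rwmixread]
make_fio_job() {
  local size="$1" bs="$2" rw="$3" iodepth="$4" mix="${5:-}"
  local lsize="${size,,}" short
  case "$rw" in                     # short names used in the log file names
    write)    short=w ;;
    randread) short=randr ;;
    *)        short="$rw" ;;
  esac
  local tag="${lsize}-${bs}-${short}"
  cat <<EOF
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=${iodepth}
log_avg_msec=500
group_reporting

[test_${lsize}]
bs=${bs}
size=${size}
rw=${rw}
EOF
  if [ -n "$mix" ]; then echo "rwmixread=${mix}"; fi
  printf 'write_bw_log=%s\nwrite_iops_log=%s\nwrite_lat_log=%s\n' "$tag" "$tag" "$tag"
}
```

For example, `make_fio_job 64G 8k randrw 32 70 > 64g-8k-randrw.fio && fio 64g-8k-randrw.fio` recreates the third test.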

64GiB file, 128KiB blocks, sequential write

Parameters:

```ini
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=4
log_avg_msec=500
group_reporting

[test_64g]
bs=128k
size=64G
rw=write
write_bw_log=64g-128k-w
write_iops_log=64g-128k-w
write_lat_log=64g-128k-w
```

Results:

```
test_64g: (g=0): rw=write, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=4
fio-3.28
Starting 1 thread
test_64g: Laying out IO file (1 file / 65536MiB)
Jobs: 1 (f=1): [W(1)][100.0%][w=1008MiB/s][w=8063 IOPS][eta 00m:00s]
test_64g: (groupid=0, jobs=1): err= 0: pid=24277: Fri Oct 20 06:11:39 2023
  write: IOPS=7963, BW=995MiB/s (1044MB/s)(64.0GiB/65835msec); 0 zone resets
    slat (usec): min=3, max=121, avg= 8.19, stdev= 2.05
    clat (usec): min=328, max=26687, avg=493.42, stdev=402.36
     lat (usec): min=335, max=26694, avg=501.76, stdev=402.37
    clat percentiles (usec):
     | 1.00th=[ 392], 5.00th=[ 416], 10.00th=[ 429], 20.00th=[ 441],
     | 30.00th=[ 453], 40.00th=[ 461], 50.00th=[ 474], 60.00th=[ 486],
     | 70.00th=[ 502], 80.00th=[ 519], 90.00th=[ 537], 95.00th=[ 586],
     | 99.00th=[ 701], 99.50th=[ 775], 99.90th=[ 1876], 99.95th=[ 3490],
     | 99.99th=[22676]
   bw ( KiB/s): min=945920, max=1072640, per=100.00%, avg=1019334.48, stdev=32107.36, samples=131
   iops : min= 7390, max= 8380, avg=7963.53, stdev=250.88, samples=131
  lat (usec) : 500=68.65%, 750=30.74%, 1000=0.36%
  lat (msec) : 2=0.16%, 4=0.04%, 10=0.01%, 20=0.02%, 50=0.02%
  cpu : usr=1.48%, sys=7.51%, ctx=518404, majf=0, minf=7
  IO depths : 1=0.1%, 2=0.1%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,524288,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=4

Run status group 0 (all jobs):
  WRITE: bw=995MiB/s (1044MB/s), 995MiB/s-995MiB/s (1044MB/s-1044MB/s), io=64.0GiB (68.7GB), run=65835-65835msec
```
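fio's bandwidth figure can be cross-checked from the totals it reports: issued I/Os × block size ÷ runtime. That figure can then be set against what a single 10GbE link can carry at all: 10 Gbit/s is 1.25×10⁹ bytes/s, or about 1192 MiB/s, before any Ethernet, IP or RDMA overhead. The sketch below does both with integer arithmetic, truncating to whole MiB/s; the function names are illustrative.

```bash
# MiB/s from fio totals: issued I/Os x block size (KiB) / runtime (ms).
bw_mib_s() {
  local ios="$1" bs_kib="$2" run_ms="$3"
  echo $(( ios * bs_kib * 1000 / 1024 / run_ms ))
}

# Raw link rate in MiB/s for a given Gbit/s, with no protocol overhead.
line_rate_mib_s() {
  local gbit="$1"
  echo $(( gbit * 1000000000 / 8 / 1048576 ))
}
```

For this run, `bw_mib_s 524288 128 65835` gives 995, matching fio. Against `line_rate_mib_s 10` (1192), that is about 83% of the raw link rate; the 128KiB random read further below reaches about 91%. For large blocks, the single 10GbE link may therefore be closer to the limit than the pool is.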

64GiB file, 8KiB blocks, random read

Parameters:

```ini
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=32
log_avg_msec=500
group_reporting

[test_64g]
bs=8k
size=64G
rw=randread
write_bw_log=64g-8k-randr
write_iops_log=64g-8k-randr
write_lat_log=64g-8k-randr
```

Results:

```
test_64g: (g=0): rw=randread, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=32
fio-3.28
Starting 1 thread
Jobs: 1 (f=1): [r(1)][100.0%][r=691MiB/s][r=88.4k IOPS][eta 00m:00s]
test_64g: (groupid=0, jobs=1): err= 0: pid=20591: Fri Oct 20 04:57:14 2023
  read: IOPS=88.7k, BW=693MiB/s (727MB/s)(64.0GiB/94561msec)
    slat (nsec): min=900, max=135961, avg=2217.28, stdev=834.14
    clat (usec): min=67, max=10804, avg=357.93, stdev=66.35
     lat (usec): min=69, max=10806, avg=360.26, stdev=66.33
    clat percentiles (usec):
     | 1.00th=[ 302], 5.00th=[ 326], 10.00th=[ 334], 20.00th=[ 343],
     | 30.00th=[ 347], 40.00th=[ 351], 50.00th=[ 355], 60.00th=[ 359],
     | 70.00th=[ 367], 80.00th=[ 371], 90.00th=[ 379], 95.00th=[ 388],
     | 99.00th=[ 433], 99.50th=[ 506], 99.90th=[ 783], 99.95th=[ 971],
     | 99.99th=[ 3556]
   bw ( KiB/s): min=698224, max=717440, per=100.00%, avg=709829.17, stdev=4189.03, samples=189
   iops : min=87278, max=89680, avg=88728.66, stdev=523.61, samples=189
  lat (usec) : 100=0.01%, 250=0.15%, 500=99.33%, 750=0.40%, 1000=0.07%
  lat (msec) : 2=0.03%, 4=0.01%, 10=0.01%, 20=0.01%
  cpu : usr=9.22%, sys=29.43%, ctx=7201569, majf=0, minf=74
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=8388608,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
   READ: bw=693MiB/s (727MB/s), 693MiB/s-693MiB/s (727MB/s-727MB/s), io=64.0GiB (68.7GB), run=94561-94561msec
```
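These runs can be sanity-checked with Little's law. A single job keeps a fixed number of I/Os in flight, so throughput is approximately iodepth ÷ mean latency.
- 8KiB random read: 32 ÷ 360.26 µs ≈ 88.8k IOPS, against 88.7k measured.
- 128KiB sequential write: 4 ÷ 501.76 µs ≈ 7.97k, against 7963 measured.

The close agreement indicates the queue stayed full for the whole run. At that point, per-I/O latency is what sets IOPS at the chosen depth. Whether more jobs or a deeper queue would scale further was not measured. The sketch below takes latency in nanoseconds to stay in integer arithmetic; the function name is illustrative.

```bash
# Little's law for one fio job: IOPS ~= iodepth / mean total latency.
# lat_ns is fio's "lat ... avg=" value converted to nanoseconds.
littles_law_iops() {
  local depth="$1" lat_ns="$2"
  echo $(( depth * 1000000000 / lat_ns ))
}
```

For example, `littles_law_iops 32 360260` uses the 360.26 µs average above.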

64GiB file, 8KiB blocks, random read/write (7:3)

Parameters:

```ini
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=32
log_avg_msec=500
group_reporting

[test_64g]
bs=8k
size=64G
rw=randrw
rwmixread=70
write_bw_log=64g-8k-randrw
write_iops_log=64g-8k-randrw
write_lat_log=64g-8k-randrw
```

Results:

```
test_64g: (g=0): rw=randrw, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=32
fio-3.28
Starting 1 thread
Jobs: 1 (f=1): [m(1)][99.7%][r=188MiB/s,w=81.3MiB/s][r=24.1k,w=10.4k IOPS][eta 00m:01s]
test_64g: (groupid=0, jobs=1): err= 0: pid=20648: Fri Oct 20 05:04:12 2023
  read: IOPS=17.9k, BW=140MiB/s (146MB/s)(44.8GiB/328796msec)
    slat (nsec): min=840, max=158681, avg=2546.54, stdev=842.43
    clat (usec): min=95, max=529312, avg=1004.15, stdev=1289.16
     lat (usec): min=97, max=529314, avg=1006.83, stdev=1289.18
    clat percentiles (usec):
     | 1.00th=[ 347], 5.00th=[ 437], 10.00th=[ 502], 20.00th=[ 594],
     | 30.00th=[ 685], 40.00th=[ 766], 50.00th=[ 857], 60.00th=[ 955],
     | 70.00th=[ 1074], 80.00th=[ 1237], 90.00th=[ 1582], 95.00th=[ 2089],
     | 99.00th=[ 3294], 99.50th=[ 3785], 99.90th=[ 5669], 99.95th=[11338],
     | 99.99th=[24511]
   bw ( KiB/s): min=44368, max=437440, per=99.98%, avg=142836.49, stdev=47055.15, samples=657
   iops : min= 5546, max=54680, avg=17854.54, stdev=5881.90, samples=657
  write: IOPS=7655, BW=59.8MiB/s (62.7MB/s)(19.2GiB/328796msec); 0 zone resets
    slat (nsec): min=980, max=184431, avg=2773.43, stdev=896.94
    clat (usec): min=324, max=500660, avg=1826.59, stdev=1628.34
     lat (usec): min=329, max=500663, avg=1829.50, stdev=1628.36
    clat percentiles (usec):
     | 1.00th=[ 562], 5.00th=[ 873], 10.00th=[ 988], 20.00th=[ 1139],
     | 30.00th=[ 1270], 40.00th=[ 1401], 50.00th=[ 1549], 60.00th=[ 1713],
     | 70.00th=[ 1909], 80.00th=[ 2212], 90.00th=[ 2868], 95.00th=[ 3982],
     | 99.00th=[ 5932], 99.50th=[ 6587], 99.90th=[10028], 99.95th=[16712],
     | 99.99th=[28443]
   bw ( KiB/s): min=19184, max=188096, per=99.98%, avg=61227.86, stdev=20123.08, samples=657
   iops : min= 2398, max=23512, avg=7653.45, stdev=2515.40, samples=657
  lat (usec) : 100=0.01%, 250=0.01%, 500=7.20%, 750=20.12%, 1000=20.87%
  lat (msec) : 2=40.08%, 4=9.99%, 10=1.66%, 20=0.05%, 50=0.01%
  lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%
  cpu : usr=3.49%, sys=9.81%, ctx=6871341, majf=0, minf=35
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=5871656,2516952,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
   READ: bw=140MiB/s (146MB/s), 140MiB/s-140MiB/s (146MB/s-146MB/s), io=44.8GiB (48.1GB), run=328796-328796msec
  WRITE: bw=59.8MiB/s (62.7MB/s), 59.8MiB/s-59.8MiB/s (62.7MB/s-62.7MB/s), io=19.2GiB (20.6GB), run=328796-328796msec
```
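For a mixed run, the Little's-law check needs an I/O-weighted mean latency. The I/O counts come from the `issued rwts` line and the per-direction averages from the two `lat` lines. The same counts also confirm that `rwmixread=70` took effect. The sketch uses integer arithmetic with latencies in nanoseconds; the function names are illustrative.

```bash
# Little's law with an I/O-weighted mean latency for a read/write mix:
# IOPS ~= depth * (reads + writes) / (reads*read_lat + writes*write_lat).
mixed_littles_law_iops() {
  local depth="$1" reads="$2" read_lat_ns="$3" writes="$4" write_lat_ns="$5"
  echo $(( depth * 1000000000 * (reads + writes) / (reads * read_lat_ns + writes * write_lat_ns) ))
}

# Read share in percent, rounded to the nearest integer.
read_share_pct() {
  local reads="$1" writes="$2"
  echo $(( (200 * reads + reads + writes) / (2 * (reads + writes)) ))
}
```

For this run, `mixed_littles_law_iops 32 5871656 1006830 2516952 1829500` predicts about 25.5k IOPS in total, consistent with fio's 17.9k read + 7655 write. `read_share_pct 5871656 2516952` returns 70.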

256GiB file, 128KiB blocks, random read

Parameters:

```ini
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=32
log_avg_msec=500
group_reporting

[test_256g]
bs=128k
size=256G
rw=randread
write_bw_log=256g-128k-randr
write_iops_log=256g-128k-randr
write_lat_log=256g-128k-randr
```

Results:

```
test_256g: (g=0): rw=randread, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=32
fio-3.28
Starting 1 thread
Jobs: 1 (f=1): [r(1)][100.0%][r=1079MiB/s][r=8630 IOPS][eta 00m:00s]
test_256g: (groupid=0, jobs=1): err= 0: pid=22939: Fri Oct 20 05:40:25 2023
  read: IOPS=8640, BW=1080MiB/s (1133MB/s)(256GiB/242716msec)
    slat (usec): min=3, max=169, avg= 9.71, stdev= 4.50
    clat (usec): min=412, max=13856, avg=3693.08, stdev=1001.63
     lat (usec): min=425, max=13865, avg=3702.94, stdev=1000.39
    clat percentiles (usec):
     | 1.00th=[ 1516], 5.00th=[ 2114], 10.00th=[ 2442], 20.00th=[ 2835],
     | 30.00th=[ 3130], 40.00th=[ 3359], 50.00th=[ 3654], 60.00th=[ 3982],
     | 70.00th=[ 4228], 80.00th=[ 4555], 90.00th=[ 5080], 95.00th=[ 5407],
     | 99.00th=[ 5932], 99.50th=[ 6063], 99.90th=[ 6390], 99.95th=[ 6521],
     | 99.99th=[ 7898]
   bw ( MiB/s): min= 1066, max= 1087, per=100.00%, avg=1080.23, stdev= 3.61, samples=485
   iops : min= 8528, max= 8702, avg=8641.84, stdev=28.90, samples=485
  lat (usec) : 500=0.01%, 750=0.04%, 1000=0.15%
  lat (msec) : 2=3.79%, 4=57.10%, 10=38.92%, 20=0.01%
  cpu : usr=1.00%, sys=8.78%, ctx=330845, majf=0, minf=1041
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=2097152,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
   READ: bw=1080MiB/s (1133MB/s), 1080MiB/s-1080MiB/s (1133MB/s-1133MB/s), io=256GiB (275GB), run=242716-242716msec
```

256GiB file, 128KiB blocks, random read/write (7:3)

Parameters:

```ini
[global]
directory=/bmg_pool0/home/bmg-admin
ioengine=libaio
direct=1
thread=1
iodepth=32
log_avg_msec=500
group_reporting

[test_256g]
bs=128k
size=256G
rw=randrw
rwmixread=70
write_bw_log=256g-128k-randrw
write_iops_log=256g-128k-randrw
write_lat_log=256g-128k-randrw
```

Results:

```
test_256g: (g=0): rw=randrw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=32
fio-3.28
Starting 1 thread
Jobs: 1 (f=1): [m(1)][100.0%][r=905MiB/s,w=390MiB/s][r=7242,w=3122 IOPS][eta 00m:00s]
test_256g: (groupid=0, jobs=1): err= 0: pid=9767: Fri Oct 20 03:05:34 2023
  read: IOPS=7041, BW=880MiB/s (923MB/s)(179GiB/208452msec)
    slat (usec): min=3, max=146, avg= 8.76, stdev= 3.40
    clat (usec): min=622, max=2246.6k, avg=3255.70, stdev=9227.68
     lat (usec): min=639, max=2246.6k, avg=3264.62, stdev=9227.64
    clat percentiles (usec):
     | 1.00th=[ 1663], 5.00th=[ 2057], 10.00th=[ 2245], 20.00th=[ 2507],
     | 30.00th=[ 2704], 40.00th=[ 2900], 50.00th=[ 3130], 60.00th=[ 3359],
     | 70.00th=[ 3589], 80.00th=[ 3851], 90.00th=[ 4178], 95.00th=[ 4424],
     | 99.00th=[ 5014], 99.50th=[ 5276], 99.90th=[10945], 99.95th=[11863],
     | 99.99th=[20055]
   bw ( KiB/s): min= 1795, max=945920, per=100.00%, avg=910253.63, stdev=90889.92, samples=412
   iops : min= 14, max= 7390, avg=7111.34, stdev=710.07, samples=412
  write: IOPS=3019, BW=377MiB/s (396MB/s)(76.8GiB/208452msec); 0 zone resets
    slat (usec): min=3, max=155, avg=10.69, stdev= 3.60
    clat (usec): min=458, max=1350.0k, avg=2972.16, stdev=7312.86
     lat (usec): min=473, max=1350.1k, avg=2983.00, stdev=7312.81
    clat percentiles (usec):
     | 1.00th=[ 1450], 5.00th=[ 1860], 10.00th=[ 2057], 20.00th=[ 2278],
     | 30.00th=[ 2474], 40.00th=[ 2638], 50.00th=[ 2802], 60.00th=[ 2999],
     | 70.00th=[ 3228], 80.00th=[ 3523], 90.00th=[ 3884], 95.00th=[ 4178],
     | 99.00th=[ 4817], 99.50th=[ 5276], 99.90th=[12256], 99.95th=[12780],
     | 99.99th=[17695]
   bw ( KiB/s): min= 1026, max=423680, per=100.00%, avg=390324.98, stdev=40178.99, samples=412
   iops : min= 8, max= 3310, avg=3049.39, stdev=313.90, samples=412
  lat (usec) : 500=0.01%, 750=0.01%, 1000=0.05%
  lat (msec) : 2=5.36%, 4=81.79%, 10=12.60%, 20=0.18%, 50=0.01%
  lat (msec) : 100=0.01%, 250=0.01%, 500=0.01%, 1000=0.01%, 2000=0.01%
  lat (msec) : >=2000=0.01%
  cpu : usr=1.93%, sys=9.41%, ctx=600889, majf=0, minf=25
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=1467743,629409,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=32
```

6 Just for fun

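I forced two TITAN V cards into this NAS. One 650W power supply was not enough, so I dug out another PSU that had been gathering dust on my nightstand for 3 years. With this odd setup I tested TITAN V performance on ReaxFF simulations; the results will be published separately later.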
